Local Gay Apps

Find gay singles near you

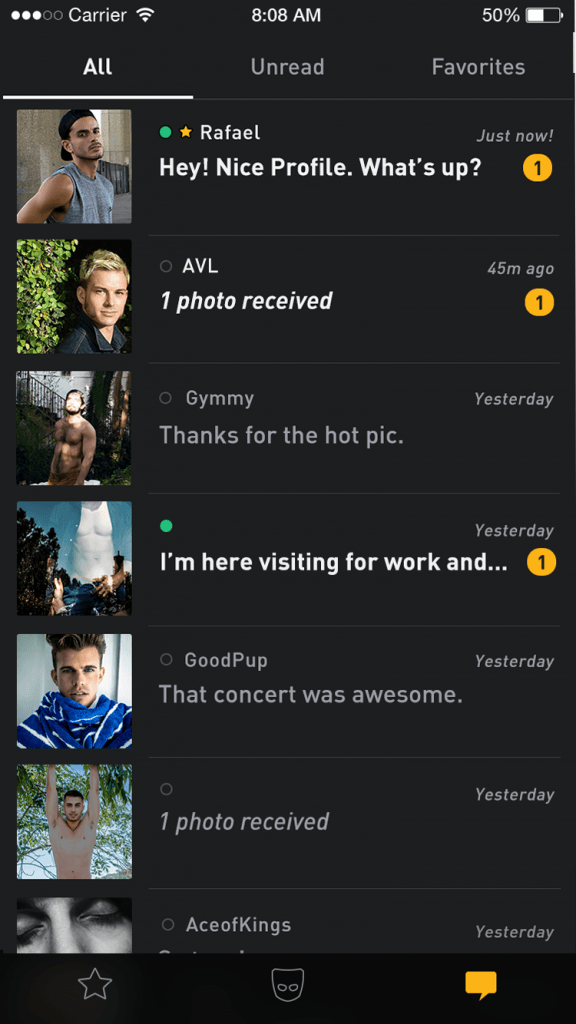

SCRUFF is the top-rated and most reliable app for gay, bi, trans and queer guys to connect. More than 15 million guys worldwide use SCRUFF to find friends, dates, hookups, events, travel tips, and more. SCRUFF is an independent, LGBTQ-owned and operated company, and we use the app that we build. By its very premise, a local gay dating app requires users to have a smartphone. Blued does not assume that its users are gay, trans, bi or anything else. No surprise here! However, Blued is the only dating app that was actually created by and for queer men.

Now that gay men are finally starting to gain legal marriage rights and social and cultural respect, the number of openly gay personal ads has blossomed. If you are looking for local men seeking men, MyLocalCupid.com lets you view local singles instantly and free of charge.

You can also use our site to learn about dating tips, online dating etiquette, and how you can protect your online privacy.


Reckless associations can do very real harm when they appear in supposedly neutral environments like online stores

As I was installing Grindr on my Android phone yesterday, I scrolled down to take a look at the list of 'related' and 'relevant' applications. My jaw dropped. There, first on the list, was 'Sex Offender Search,' a free application created by Life360 that lets you 'find sex offenders near you and protect your child ... so you can keep your family safe.'

I was flabbergasted. How and why was this association being made? What could one application have to do with the other? How many potential Grindr users were dissuaded from downloading the application because they saw this listed as a related application? In essence: Who did this linking, how does it work, and what harm is it doing?

For those who don't know, Grindr describes itself as a 'simple, fast, fun, and free way to find and meet gay, bi and curious guys for dating, socializing, and friendship.' It's one of an emerging set of location-based technologies targeted at gay men looking to socialize, where 'socialize' can mean a wide variety of things, including chatting, hooking up to have sex or developing a friendship. You start up the app and immediately see how close other users are and some information about them.

Grindr isn't unique or new in this respect. Manhunt, Jack'd, Scruff and Maleforce all have iPhone or Android apps, and sites like GayRomeo.com, Adam4Adam.com and gaydar.co.uk have been letting gay/bi/curious men filter user profiles by geographic location for years. Such sites, applications, and the practices they make possible are becoming downright mainstream: Sharif Mowlabocus wrote a whole book on what he calls 'Gaydar Culture'; Online Buddies (the makers of Manhunt) partners with academics to conduct innovative worldwide research on online gay male practices; and Grindr CEO Joel Simkhai was a panelist this year at SXSW Interactive.

So if all of this is becoming so seemingly mainstream, why is it being linked to sex offenders?

To answer the first question of who made this association between Grindr and Sex Offender Search: it is those who design and maintain the Android Marketplace, Android's counterpart to Apple's App Store. To be fair, Apple has had its own struggles deciding what kinds of apps to sell: for a while it rejected a cartoon app created by Pulitzer Prize-winning cartoonist Mark Fiore because it 'ridiculed public figures'; and it recently deleted an app designed by a religious group to 'cure' gay people. The problem of deciding what to sell through the app store is not new. But we're far from fully understanding the ethical obligations that Apple, Google and others have when their platforms act as de facto regulators of free speech.

The second question -- how was this association made -- is harder to answer. Google, Microsoft, Apple and Facebook don't make public the systems and algorithms they use to create relationships among data. Is the Android Marketplace noticing a large overlap between those who download Grindr and those who install Sex Offender Search? This seems unlikely given their two very different target audiences.
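The co-download hypothesis can be made concrete with a minimal sketch. It assumes, purely for illustration, that a store ranks 'related' apps by the Jaccard overlap of their install bases; the Marketplace's real method is not public. The app names other than Grindr and Sex Offender Search, the user IDs, and the `related_apps` helper are all hypothetical.

```python
"""Hypothetical co-install recommender: ranks apps by Jaccard overlap of their users."""


def jaccard(a: set[str], b: set[str]) -> float:
    """Share of users common to both sets: len(a & b) / len(a | b)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def related_apps(installs: dict[str, set[str]], app: str, k: int = 3) -> list[tuple[str, float]]:
    """Return the k apps whose install bases overlap most with `app`."""
    target = installs[app]
    scores = [
        (other, jaccard(target, users))
        for other, users in installs.items()
        if other != app
    ]
    # Highest overlap first; sort is stable, so ties keep insertion order.
    scores.sort(key=lambda pair: pair[1], reverse=True)
    return scores[:k]


# Invented install data: user IDs are placeholders, not real figures.
installs = {
    "Grindr": {"u1", "u2", "u3", "u4"},
    "Sex Offender Search": {"u7", "u8"},
    "Hypothetical Social App A": {"u1", "u2", "u5"},
    "Hypothetical Lifestyle App B": {"u3", "u6"},
}

for name, score in related_apps(installs, "Grindr"):
    print(f"{name}: {score:.2f}")
```

Under this assumed scoring, an app whose audience shares no users with Grindr scores zero and ranks last, which is consistent with the doubt that shared downloads explain the link.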

Was this an editorial decision made by a human curator of the Marketplace who thought the two applications were somehow related? This curious choice would say more about the curator than the applications.


Does some part of the system consider the applications' Marketplace categories -- 'Social' for Grindr and 'Lifestyle' for Sex Offender Search -- so similar that it thinks users would be interested in seeing connections between the categories? This is plausible, but there are many other applications in both categories that might be linked -- why these two?

Finally, are terms the application creators themselves use to describe their programs considered similar through some automatic keyword-matching algorithm? Sex Offender Search lists these keywords: sex offender search, sex offenders, megan's law, megan law, child molesters, sexual predators, neighborhood safety, criminals, Life360, Life 360. The description of Grindr lists no keywords but says that it 'only allows males 18 years or older' to download the application and that '[p]hotos depicting nudity or sex acts are strictly prohibited.' The only word common to both applications is 'sex.' But the word 'sex' also appears in Deep Powder Software's 'Marine Biology' application. It's hard to see the overlap among these three applications, yet for some reason the Marketplace thinks the most relevant application to Grindr is Sex Offender Search.
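The keyword-matching hypothesis can also be sketched. This assumes a naive matcher that lowercases text, splits it into words and scores a pair of apps by the words they share; that is an illustrative assumption, not the Marketplace's documented method. The keyword list and Grindr phrases are the ones quoted above; the `tokens` and `keyword_overlap` helpers are hypothetical.

```python
"""Hypothetical naive keyword matcher: compares apps by the words they share."""
import re


def tokens(text: str) -> set[str]:
    """Lowercase the text and split it into word tokens (no stemming)."""
    return set(re.findall(r"[a-z0-9']+", text.lower()))


def keyword_overlap(a: str, b: str) -> tuple[set[str], float]:
    """Return the shared tokens and their Jaccard score."""
    ta, tb = tokens(a), tokens(b)
    shared = ta & tb
    union = ta | tb
    return shared, (len(shared) / len(union) if union else 0.0)


# Keywords listed for Sex Offender Search, as quoted in the article.
sex_offender_search = (
    "sex offender search, sex offenders, megan's law, megan law, child molesters, "
    "sexual predators, neighborhood safety, criminals, Life360, Life 360"
)
# Phrases quoted from Grindr's Marketplace description.
grindr = (
    "only allows males 18 years or older. "
    "Photos depicting nudity or sex acts are strictly prohibited."
)

shared, score = keyword_overlap(sex_offender_search, grindr)
print(shared, round(score, 3))
```

The only shared token is 'sex', yet that single word is enough to give the pair a nonzero score. 'Sexual' does not match 'sex' because this sketch does no stemming; a real system could tokenize and weight terms very differently.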

The last question -- what harm are such associations doing -- is more complicated to answer. Associations like those listed in the Android Marketplace (or Apple's Genius system, Amazon's recommendation engine or Bing's search suggestions) can be starting points for good conversation or chilling silencers of individual expression and community identity. To be starting points for conversation, designers must first acknowledge that recommendation systems (both those that are run by humans and those relying upon algorithms) have the power to suggest and constrain expression. Bizarre links between Grindr and Sex Offender Search can be great starting points for those who are privileged enough to recognize nonsensical associations, possess enough technical knowledge to understand how such systems might make links, and have the confidence and communication skills to argue the point with friends, family members and others. These can be great opportunities to debunk bad thinking that would otherwise go unchallenged.

But if we think that technologies are somehow neutral and objective arbiters of good thinking -- rational systems that simply describe the world without making value judgments -- we run into real trouble. For example, if recommendation systems suggest that certain associations are more reasonable, rational, common or acceptable than others, we run the risk of silencing minorities. (This is the well-documented 'Spiral of Silence' effect that political scientists routinely observe: people are less likely to express themselves if they think their opinions are in the minority, or likely to be in the minority in the near future.)

* * *

Imagine for a moment a gay man questioning his sexual orientation. He has told no one else that he's attracted to guys and hasn't entirely come out to himself yet. His family, friends and co-workers have suggested to him -- either explicitly or subtly -- that they're either homophobic at worst, or grudgingly tolerant at best. He doesn't know anyone else who's gay and he's desperate for ways to meet others who are gay/bi/curious -- and, yes, maybe see how it feels to have sex with a guy. He hears about Grindr, thinks it might be a low-risk first step in exploring his feelings, goes to the Android Marketplace to get it, and looks at the list of 'relevant' and 'related' applications. He immediately learns that he's about to download something onto his phone that in some way -- some way that he doesn't entirely understand -- associates him with registered sex offenders.

What's the harm here? In the best case, he knows that the association is ridiculous, gets a little angry, vows to do more to combat such stereotypes, downloads the application and has a bit more courage as he explores his identity. In a worse case, he sees the association, freaks out that he's being tracked and linked to sex offenders, doesn't download the application and continues feeling isolated. Or maybe he even starts to think that there is a link between gay men and sexual abuse because, after all, the Marketplace had to have made that association for some reason. If the objective, rational algorithm made the link, there has to be some truth to the link, right?

Now imagine the reverse situation, where someone downloads the Sex Offender Search application and sees that Grindr is listed as a 'related' or 'relevant' application. In the best case, people see the link as ridiculous, question where it might have come from, and start learning about what other kinds of erroneous assumptions (social, legal and cultural) might underpin the Registered Sex Offender system. In a worse case, they see the link and think 'you see, gay men are more likely to be pedophiles, even the technologies say so.' Despite repeated scientific studies that reject such correlations, they use the Marketplace link as 'evidence' the next time they're talking with family, friends or co-workers about sexual abuse or gay rights.

The point here is that reckless associations -- made by humans or computers -- can do very real harm, especially when they appear in supposedly neutral environments like online stores. Because the technologies can seem neutral, people can mistake their associations for objective evidence of human behavior.

We need to critique not just whether an item should appear in online stores -- this example goes beyond the Apple App Store cases that focus on whether an app should be listed -- but, rather, why items are related to each other. We must look more closely and be more critical of 'associational infrastructures': technical systems that operate in the background with little or no transparency, fueling assumptions and links that we subtly make about ourselves and others. If we're more critical and skeptical of technologies and their seemingly objective algorithms we have a chance to do two things at once: design even better recommendation systems that speak to our varied humanities, and uncover and debunk stereotypes that might otherwise go unchallenged.

The more we let systems make associations for us without challenging their underlying logics, the greater risk we run of damaging who we are, who others see us as, and who we can imagine ourselves as.


Editor's Note, 5:22 p.m. April 15, 2011: Because some readers have questioned, in the comments below, whether the association mentioned in the article ever existed, we've included a screenshot taken at the time this piece was written. Visitors to Grindr on the Android Marketplace today no longer see Sex Offender Search as a related app; the change occurred around 10 p.m. EST on Thursday, April 14 -- that's when the author noticed the switch after periodically refreshing the Marketplace following publication of this story.